What would my preferred AI future look like?

About this school story

Malyn Mawby, Head of Personalised Learning at Roseville College, explains how she implemented project-based learning (PBL) with her Year 10 class to explore Artificial Intelligence (AI). Through the PBL task, students selected an area of interest and investigated what is possible, probable, and preferred.

Year band: 9-10

Our focus

“As students progress through the Technologies curriculum, they will begin to identify possible and probable futures, and their preferences for the future…Students will learn to recognise that views about the priority of the benefits and risks will vary and that preferred futures are contested.” (ACARA)

The overarching idea of the current Digital Technologies national curriculum is ‘Creating preferred futures’. This is a really exciting idea that potentially makes learning engaging, relevant, and ultimately purposeful!

While NSW is yet to implement the national curriculum in Stage 5 (Years 9 and 10), the current Information & Software Technologies (IST) syllabus has sufficient outcomes, content, and flexibility to incorporate this overarching idea. It is possible to explore emerging technologies such as Artificial Intelligence (AI) and see what is possible, probable, and preferred depending on their impact and the chosen perspective. This was the focus of this project-based learning (PBL) unit with Year 10 IST.

What is possible?

Starting with a general overview of AI, students then did a deep dive, via a case study, into a specific emerging AI area of their choice. Choice was informed not only by personal interests but also by the availability of research and ease of comprehension, in terms of both concepts and language. It was important to find resources that showed what was on the cusp of entering the public domain, to facilitate extrapolation into what was probable in the future. Fiona Sharman, teacher-librarian at the school, ran several research workshops covering strategic search, note-taking and referencing.

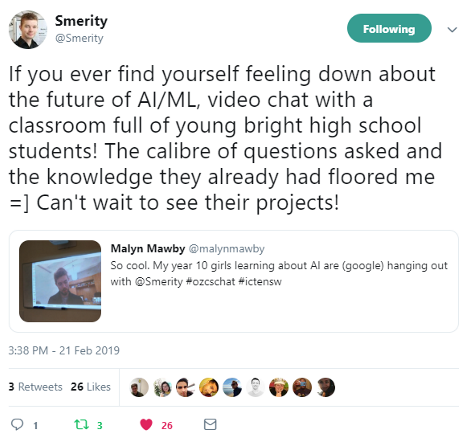

The class was also privileged to have an online video conversation with Stephen Merity. Smerity, as he prefers to be called, works in the field and also writes and speaks about it. In as plain English as possible, he explained what AI is about, emerging trends, and the need to consider impacts and contexts. Smerity talked about the impact on researchers and, consequently, on the development of the technology. His explanation of ‘dual use technology’ further increased the relevance of this task, that is, exploring what is probable and preferred.

What is probable?

The purpose of the case study was to home in on particular applications of AI to facilitate analysis of the technology’s impact on individuals and society. The plan was not to generalise but rather, through specifics, to get a better understanding of the issues at play when using or developing emerging technologies. Issues included legal, social and ethical implications. Focusing on what was already or almost happening made it (slightly) easier to imagine what could happen. A more involved research task would look further into varying levels of likelihood, but that would be beyond the current scope and capabilities.

The class had to depict this probable world using multimedia.

What is preferred?

As the Australian Curriculum states, and as Smerity pointed out, what is preferred can vary depending on context. Computing students often find it difficult to take on perspectives other than their own. Quite frankly, this is not limited to students, which is why ‘You are not your user’ has so much traction in the IT industry.

In this task, students had to choose a fictional Marvel universe character to frame their preferences. The character may be the developer and/or user of the AI; these are the two major perspectives on technology within the IST course. Given more time, it would have been good to stipulate having both hero and villain perspectives. Nevertheless, this task component forced students to take on a different persona with which to create a justified conclusion on whether or not to further develop or use the technology.

Key resources

The PBL framework – Discover-Create-Share – used for this project is derived from the work of Bianca and Lee Hewes. It is a simple yet powerful framework for designing PBL units.

Computing Technology: A project-based approach – This textbook by David Grover et al. covers other core content in accessible language, such as explanations of issues and an overview of AI.

Really good primers on AI and its impacts:

- video of Genevieve Bell’s talk on AI: Making a Human Connection

- Harvard Business Review podcast with Kathryn Hume: Designing AI to Make Decisions.

Reach out to Smerity on Twitter, or for more in-depth information, visit his blog. He is very good at explaining technical concepts and, more importantly, is an advocate for making IT a tool for creating a better world.

The high-level PBL framework and the more detailed Assessment task notification are available in PDF. Note: The task notification could perhaps be reframed to ‘possible-probable-preferred’ to make it easier to understand. Some students said it was a bit confusing.

Planned outcomes

The PBL was a summative task with the following assessable outcomes for reporting:

5.3.1 Justifies responsible practices and ethical use of information and software technology

5.3.2 Acquires and manipulates data and information in an ethical manner

Assessable products generated by the students comprise a written case study report and a multimedia depiction of the probable future AI world.

The project ran for just over five weeks. Incorporated into the task are metacognitive reflections on the research process as well as project management. Focusing on select sub-processes highlights the fact that these complex skills are made up of multiple components and, speaking practically, reduces the assessment marking load.

Another relatively small component of the task was the Annotated bibliography. This provided a contextual application of data quality: accuracy, currency, relevance, and integrity.

Tangential outcomes

Not all project outcomes were planned or assessed. These are some examples:

- Outcomes 5.1.1 and 5.1.2 on choice of software and hardware to create products: these were treated as Assessment for Learning (AfL), rather than summative. This allowed students to focus on actual outcomes being assessed, AI and Ethics, which were substantial enough.

- Connections with other topics in the course: for example, Smerity talked about modelling and simulation, algorithms, and careers. Furthermore, machine learning involving huge data sets connected with database design and data-handling content.

- The value of opening classroom doors to experts, be it for research skills or subject content: this is crucial in studying technologies.

Serendipitous outcomes

These outcomes were not planned but turned out to be incredibly empowering experiences for students and teachers involved.

- The opportunity to have an even broader audience to share our story by contributing here in the DT Hub.

- Just after students submitted their projects, the Australian Government Department of Industry, Innovation and Science sent out an invitation for consultation on Artificial Intelligence: Australia’s Ethics Framework. This is an emphatic validation of the task and, really, the Digital Technologies curriculum.

Student projects

These are the students and what they explored in brief. They take pride in their case studies and depiction of future AI. The girls have learned so much about the technology and, crucially, its impact on individuals and society.

- Ana – Autonomous self-driving cars

Autonomous vehicles take in information about their surroundings and use this to operate with little human help. Systems built into the vehicle assist the car in understanding its environment and steer the car in most situations on the road. The car can change lanes, manage its speed, control braking and detect obstacles.

- Charli – Deepfake videos

Deepfake is an AI technology that learns through training to synthesise subjects in images so it can make a video combining the movements of one subject with the appearance of another. It works by training a deep neural network to take an input (a face or body), compress it down, then regenerate it to fit a second subject’s movement.

The following girls collaborated to create one video incorporating all their chosen technologies.

- Isabella – DeepLocker and evasive malware

Cybersecurity is constantly advancing to keep up with the capabilities and vulnerabilities of technologies. The use of AI has grown in the cybersecurity sector, including the dual use of some elements to create highly targeted and evasive pieces of malware. IBM’s DeepLocker camouflaged the malware WannaCry within a video conferencing application classed as benign by antivirus systems. IBM used facial recognition as the trigger condition to execute the malicious payload.

- Sophie – Facial biometrics for passport identification

Facial biometrics for passport identification is increasingly used in airports globally. Examples include ‘Smart Gates’ and ‘EPassport Gates’. These gates use facial recognition to match the traveller’s face to the picture in the given passport.

- Archisha – Deception Analysis and Reasoning Engine (DARE)

DARE is an AI system that can detect if a person is telling the truth. The technology is trained to identify and classify human micro-expressions, such as ‘lips protruded’, ‘eyebrows frown’ and ‘eye blinks’, along with analysing vocal patterns and inconsistencies, to signify whether someone is lying or not.

- Alexandra – SmartVis facial recognition

SmartVis, a live facial recognition-based AI, was developed by Digital Barriers for the purpose of detecting, recognising and tracking people from a targeted list such as criminals or missing persons. It is described as ‘software solutions and cloud services to manage the capture, analysis and streaming of live video, enabling actionable video intelligence to be delivered where it is needed, when it is needed.’
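The facial recognition projects above (Sophie’s passport gates and Alexandra’s SmartVis) both rest on comparing a live face with a stored reference. For readers curious about the programming side, here is a minimal Python sketch of that comparison step. It assumes each face has already been turned into a list of numbers (a ‘face vector’); the function names, the made-up vectors and the 0.9 threshold are all hypothetical, and this is not how Smart Gates or SmartVis are actually implemented.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def is_same_person(live_face, reference_face, threshold=0.9):
    """Hypothetical check: accept when the two face vectors are similar enough.

    The threshold is an assumed value chosen for illustration only.
    """
    return cosine_similarity(live_face, reference_face) >= threshold


# Made-up four-number face vectors. Real systems derive much longer
# vectors from images using a trained neural network.
passport_photo = [0.9, 0.1, 0.4, 0.7]
traveller = [0.88, 0.12, 0.41, 0.69]
stranger = [0.1, 0.9, 0.7, 0.2]

print(is_same_person(traveller, passport_photo))
print(is_same_person(stranger, passport_photo))
```

Where the threshold is set is a design decision with ethical weight: a lower value lets more impostors through, while a higher value turns away more genuine travellers. This connects the programming directly to the impact issues explored in the case studies.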

Reflections on the project

Delving into what is possible, probable and preferred with regard to AI proved to be an unforgettable teaching and learning experience. The discipline required for a proper case study, coupled with a complex topic, made it challenging for teacher and students alike. Student reflections suggest a deeper understanding of the overarching idea as well as a burgeoning curiosity about how it can come to be.

Here are some examples of student reflections indicative of what they have learned (PDF provided for more):

‘I used to think AI was just a mystical thing that I heard about in movies and in the media and I don’t understand; now I think that AI is an extremely interesting and complicated area of technology that has so much potential to do good and harm.’

‘I used to think that working alone is easier than working in groups; now I think that working in groups allows for a further understanding of topics, and I might even learn more because I learn off other people’s knowledge in the group and their topics.’

‘… I found that looking at the effects of AI’s development from our perspective and the perspective of an industry professional was very beneficial because it presented us with the skills that we will require in the future no matter whether we work in the technology industry or not.’

‘… I personally take a deep interest in politics and through our focus on the effects of AI in both class discussions and the assessment, I found … a deeper and more extensive understanding and ability to understand the effects of technology on society.’

‘A new idea I had that extended my thinking is that technology can revolutionise society … Hearing about what the industry professional was working on I found very interested in learning about how predictable some human patterns can be …’

‘… start wondering about possible laws and legal issues that might happen as a result of AI.’

‘Who will actually be making the decision on where to “draw the line” metaphorically when it comes to invasion of privacy and will it be the same globally or will each country have different laws?’

‘… I would like to have a larger understanding of the back-end of AI (the programming).’

Image credit: Screenshot from Twitter/@Smerity

This entire experience can be mined for future topics that include modelling and simulation, software design and programming, and automated systems.

Tips and advice

Embarking on a complex topic such as artificial intelligence can be daunting. Here are some tips to help:

- Find connections with what you know. Focusing on issues, which is still core content, made this foray manageable.

- Invest time in workshops that explicitly teach research and project management skills.

- Accept that you are not an expert. Open your classroom and bring experts in.

- Encourage students to choose topics they are personally interested in. This facilitates deeper inquiry, creation of good quality products, and motivation when the going gets tough.

- Encourage students to submit drafts. This facilitates discussions and underscores the iterative nature of designing solutions, digital or otherwise. For larger classes, be strategic about what to give feedback on to keep the workload manageable.

- Be strategic with outcomes and marking. Ongoing discussions on student projects and reading case studies on complex applications carry a bigger cognitive load.

- For this particular project, make some simple variations to open it up to other assessable outcomes such as communication and collaboration, for example:

  - pair students up and get them to choose opposing sides (5.5.1)

  - get students to create a pitch to a sci-fi movie producer featuring their chosen technology, where heroes use it for good and villains use it for evil (5.5.2)

Resources

- Student reflections

- Ana (Autonomous self-driving cars) - Case study

- Ana (Autonomous self-driving cars) - Poster

- Charli (Deepfake videos) - Case study

- Isabella (DeepLocker and evasive malware) - Case study

- Isabella (DeepLocker and evasive malware) - Web article

- Sophie (Facial biometrics for passport identification) - Case study

- Archisha (Deception Analysis and Reasoning Engine (DARE)) - Case study

- Alexandra (SmartVis facial recognition) - Case study

My school

Roseville College is a non-selective independent Anglican day school for girls, from Kindergarten to Year 12. It is a member school of the Sydney Anglican Schools Corporation and is located in the suburb of Roseville, NSW.

Roseville College offers IST mainly as a 200-hour course, although it can also be taken as a 100-hour course. There are six girls in the current Year 10 IST class; five carried on from Year 9 last year, and one girl is new to the school and has not previously studied IST. The school also offers the HSC Software Design and Development course and is committed to raising the profile of careers in STEM for women.