Can AI guess your emotion?

About this lesson

Discuss emotions as a class, and introduce the idea of artificial intelligence (AI). This lesson can also be used to introduce image classification – a key application of AI. Developed in collaboration with Digital Technologies Institute.

Year band: Foundation, 1-2, 3-4

Curriculum Links

Links with Digital Technologies Curriculum Area.

| Year | Content Description |
|---|---|
| F | Recognise and explore digital systems (hardware and software) for a purpose (AC9TDIFK01); Represent data as objects, pictures and symbols (AC9TDIFK02) |
| 1-2 | Identify and explore digital systems and their components for a purpose (AC9TDI2K01); Discuss how existing digital systems satisfy identified needs for known users (AC9TDI2P03); Represent data as pictures, symbols, numbers and words (AC9TDI2K02) |
| 3-4 | Recognise different types of data and explore how the same data can be represented differently depending on the purpose (AC9TDI4K03); Explore and describe a range of digital systems and their peripherals for a variety of purposes (AC9TDI4K01); Explore transmitting different types of data between digital systems (AC9TDI4K02); Discuss how existing and student solutions satisfy the design criteria and user stories (AC9TDI4P05); Define problems with given design criteria and by co-creating user stories (AC9TDI4P01); Follow and describe algorithms involving sequencing, comparison operators (branching) and iteration (AC9TDI4P02) |

| Year | Content Description |
|---|---|
| Foundation | Practise personal and social skills to interact respectfully with others (AC9HPFP02); Identify health symbols, messages and strategies in their community that support their health and safety (AC9HPFP06) |
| 1–2 | Identify and explore skills and strategies to develop respectful relationships (AC9HP2P02); Identify how different situations influence emotional responses (AC9HP2P03) |
| 3–4 | Explain how and why emotional responses can vary and practise strategies to manage their emotions (AC9HP4P06) |


Assessment

Teacher assessment

Below are some assessment approaches and tasks. Choose the ones that will best suit your students.

| Possible tasks |
|---|
| Draw a labelled diagram of the AI in action. Identify the digital device used, the inputs and the outputs. |
| How well did the AI recognise your group’s emotions? Use screen captures to help describe what happened. Give the AI a star rating. |
| Describe the steps you used to train your AI to recognise emotion. This can be conducted as a student interview (see the Think aloud page on the 'Guides and resources' page). |
| How might this type of AI be used in our daily lives? How does a smartphone use facial recognition to unlock? Can it be fooled? Why is this type of AI useful? |

Preliminary notes

Familiarise yourself with the Teachable Machine application and view the supporting videos.

Note: Teachable Machine requires Google Chrome on Windows or Macintosh (tablets are not supported) and a webcam.

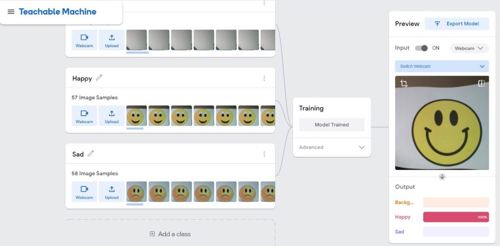

Image 1: Teachable Machine Application Happy/Sad project screenshot

The image above shows a project created in the Teachable Machine AI application. On the left, the classes are shown: Background, Happy and Sad. The preview on the right shows the model being tested using a smiley emoji. The AI recognises the emoji as ‘Happy’ (100%), shown as a complete red bar.

Safety warning

Privacy and personal information: Discuss the potential misuse of personal images when uploading images of ourselves or friends to websites. Instruct students not to record images of themselves or others via the webcam and not to upload such images.

The conditions of use for Teachable Machine state that images are not stored on external servers if the project is not saved and Teachable Machine is closed when the activity is completed. If students close the tab, nothing is saved in their browser or on any servers.

Learning map and outcomes

By the end of this lesson students will:

- Describe the key facial features that help us recognise a person’s emotions.

- Draw and recognise different faces showing an emotion such as sad, happy or angry.

- Train an Artificial Intelligence (AI) to recognise an emoji as happy or sad.

- (In a follow-up lesson) Create their own AI model to recognise images (e.g. a pet, an Australian animal or a number from 1 to 10).

Learning hook

Unplugged activity

- Use a suitable hook to discuss emotions. Why is it important that we recognise someone’s emotions?

Write or draw some emotions on cards, one emotion per card. Give a student a card to act out. Then ask the class to guess the emotion. Alternatively, show character images from a picture book, and have students guess the emotions.

Ask the class: What tells us that a person is happy, sad, angry or surprised? What is a neutral expression?

- As a class, create a table of information to look for patterns in the data.

Example table (yours may be different)

| Emotion | Features |
|---|---|
| Happy | Smiling; raised eyebrows; eyes wide open; puffy cheeks; teeth showing; lips facing up |
| Sad | Eyebrows pinched; eyes partly open; lips facing down |
| Angry | Eyebrows scrunched up; nose pinched; teeth clenched |

- Compare your descriptions of features to emojis or emoticons. How do your descriptions compare? What features are used to convey each emotion?

This activity can be done as a teacher-led activity, or adapted as a hands-on activity to suit a range of skills. Students can begin at Level 1 and demonstrate understanding at each level or start at a selected level.

The activity has been levelled to enable differentiation. The level of difficulty can be increased as follows and presented using a gaming analogy. When explaining the task, use a combination of verbal and visual instructions.

Level 1: Cut and sort emojis

You may wish to have students do a sorting activity where they have different emoji pictures that they need to cut out and sort into categories.

A sample ‘Sort the emojis’ handout in Word format can be downloaded here.

Level 2: Guess the hand-drawn emoji

Have each student create an ‘emoji quiz’ by drawing several emojis on a piece of paper and including a word bank. Students then swap papers and try to guess the emotion for each emoji. Students swap back and mark each other’s answers.

A sample ‘emoji quiz’ handout in Word format can be downloaded here.

Level 3: Comparing differences

Have students identify differences between a pair of emojis expressing the same general emotion. For example, show two different versions of a sad face emoji and have students find the differences in the two images (e.g. ‘one has a tear next to its eye’). Also have students decide which of the two images is ‘more’ of that emotion (e.g. ‘more sad’).

A sample ‘More or less emojis’ handout in Word format can be downloaded here.

Learning input

- Explain that computers can be programmed to be intelligent, or at least smart. Ask the class whether they think a computer could guess their emotions. Could the computer work out if they are happy, sad or angry? Explain that instead of using an image of ourselves we will use an emoji. Briefly explain issues around privacy and protection when uploading images of themselves to the internet.

- Use the tool Teachable Machine. This tool lets you train an AI application – without having to code – to recognise inputs and match each of them to a particular output. Use this pre-made model to test how well the AI recognises a happy or sad emoji. (You will need a device with a webcam.)

- Model how to create, train and test an AI model that can recognise happy and sad. Refer to this video explanation or follow these steps:

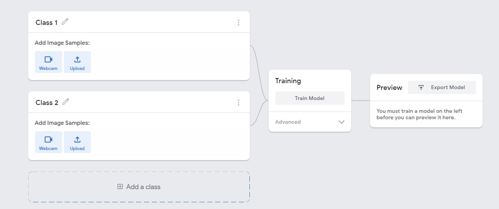

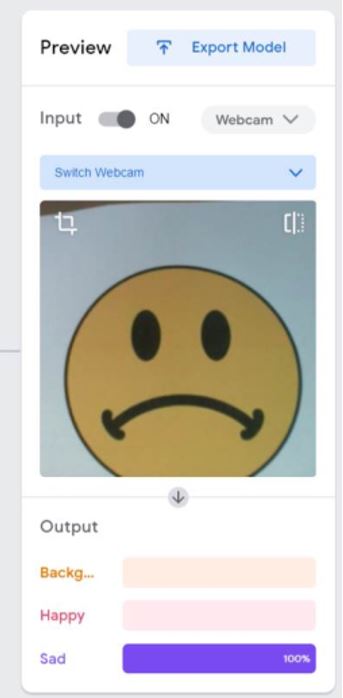

Image 2: Teachable Machine Application screenshot

- Create one class as the background, which is the empty view of the webcam. To do this, edit ‘Class 1’ and label it as ‘Background’. Record images using the webcam.

- Next, edit ‘Class 2’ and label it as ‘Happy’. Record images of smiley emojis. Use printed images or student-drawn emojis held in front of the webcam.

- Finally, add a class, edit ‘Class 3’ and label it as ‘Sad’. Record images of sad emojis. Use printed images or student-drawn emojis held in front of the webcam.

- Train the model, then test it using happy and sad emojis. Discuss how well the AI recognised the happy and sad emojis.

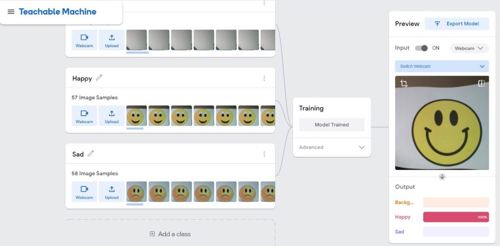

- The completed project will look something like this:

Image 3: Teachable Machine Application Happy/Sad project screenshot
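For teachers who are curious about what "train, then test" means behind the scenes, the steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tiny 10-pixel "images" are made up, and the "closest average image" technique used here is a deliberately simple stand-in, not the method Teachable Machine itself uses. The function names `train` and `classify` are hypothetical.

```python
from statistics import mean

def train(examples):
    """Average the training images of each class into one 'typical' image."""
    model = {}
    for label, images in examples.items():
        # zip(*images) groups the same pixel position across all images.
        model[label] = [mean(pixels) for pixels in zip(*images)]
    return model

def classify(model, image):
    """Return the label whose typical image is closest to the input image."""
    def distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(model, key=lambda label: distance(model[label], image))

# Made-up training data: each image is 2 rows of 5 pixels (1 = dark, 0 = light).
# A happy mouth curves up at the corners; a sad mouth curves down.
examples = {
    "Background": [[0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
                   [0, 0, 0, 0, 0, 0, 0, 1, 0, 0]],
    "Happy":      [[1, 0, 0, 0, 1, 0, 1, 1, 1, 0],
                   [1, 0, 0, 0, 1, 0, 1, 1, 0, 0]],
    "Sad":        [[0, 1, 1, 1, 0, 1, 0, 0, 0, 1],
                   [0, 1, 1, 0, 0, 1, 0, 0, 0, 1]],
}

model = train(examples)                      # "Train Model" step
new_drawing = [1, 0, 0, 0, 1, 0, 0, 1, 1, 0]  # an unseen, slightly wobbly smile
print(classify(model, new_drawing))           # prints "Happy"
```

The sketch mirrors the classroom steps: label some classes, record examples for each, train, then test with an image the model has not seen before.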

Learning construction

- Provide students with the opportunity to create their own AI model using Teachable Machine.

- Students may add a further class, such as surprised or angry, to the ‘Happy and Sad’ model. Students then demonstrate their model in action.

Learning demo

- Students may come up with their own training data and project to test the Teachable Machine AI further. Here are some prompts:

- Can the AI guess your pet type?

- Can the AI guess the Australian animal?

- Can the AI guess the number from 1 to 10?

- Can the AI work out what word you have written?

- Discuss the training data required for their project. What data will they need and where will they get it from? For example, if recognising pets, where will they source images? How many images of each pet type will they need? What labels will they use? What images will they use for testing?

- Ask students to draw a quick plan of their AI model. This plan should show the classes and describe the images needed.

Reflection

- Talk about the bar on the interface, which displays the AI’s confidence level.

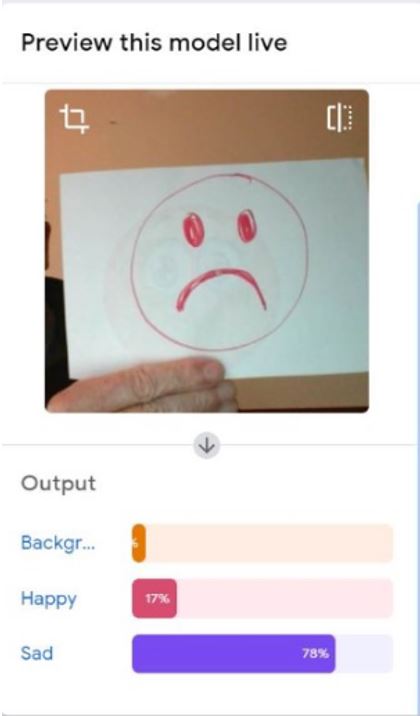

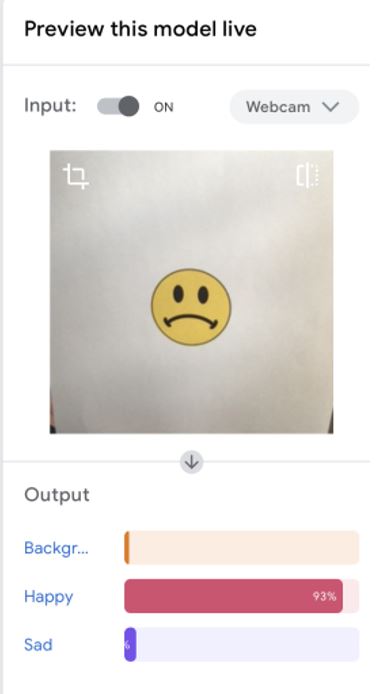

For younger students, discuss the scale. If the bar is fully coloured, the AI is very sure. If the bar is only partly coloured, the AI is not quite sure. Here’s an example from our Happy/Sad AI model:

| Correct: 100% sure | Correct: quite sure | Incorrect |
|---|---|---|
| The model is working as expected. The emoji takes up the whole screen as it was trained. | The view includes the background and a smaller view of the emoji. | The model is not working as expected. The emoji is small and does not take up the whole screen. The model is showing a bias based on size as it was trained on full screen images. Retraining would include images of different sized emojis. |

Discuss:

- When was the AI very sure of its guess?

- When was it not so sure?

- Bias can arise if the training data is not diverse enough, for example if it contains only one size or one type of image.
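The size bias described above can be reproduced with a toy example. The sketch below is an assumption-laden illustration, not how Teachable Machine works internally: each "image" is a made-up row of six pixels, and the model simply picks the class whose average training image is closest. The names `train`, `classify`, `BIG_EMOJI` and `SMALL_EMOJI` are hypothetical.

```python
from statistics import mean

def train(examples):
    """Build one average image per class from its training images."""
    return {label: [mean(p) for p in zip(*images)]
            for label, images in examples.items()}

def classify(model, image):
    """Pick the class whose average image is closest to the input."""
    def distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(model, key=lambda label: distance(model[label], image))

# Made-up one-row "images": 1 = emoji pixel, 0 = background pixel.
BIG_EMOJI = [1, 1, 1, 1, 1, 1]    # emoji fills the whole view
SMALL_EMOJI = [0, 0, 1, 1, 0, 0]  # same emoji, held further from the camera
EMPTY_VIEW = [0, 0, 0, 0, 0, 0]

# Trained only on full-screen emojis: the model has never seen a small one.
biased = train({"Background": [EMPTY_VIEW], "Happy": [BIG_EMOJI]})

# Retrained with emojis of different sizes.
retrained = train({"Background": [EMPTY_VIEW], "Happy": [BIG_EMOJI, SMALL_EMOJI]})

print(classify(biased, SMALL_EMOJI))     # prints "Background" (wrong)
print(classify(retrained, SMALL_EMOJI))  # prints "Happy"
```

The first model gets the small emoji wrong because it looks more like the empty background than like any Happy example it was shown. Adding varied sizes to the training data fixes the guess without changing the code.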

Why is this relevant?

AI is the ability of machines to mimic human capabilities in a way that we would consider 'smart'.

Machine learning is an application of AI. With machine learning, we give the machine lots of examples of data, demonstrating what we would like it to do so that it can figure out how to achieve a goal on its own. The machine learns and adapts its strategy to achieve the goal.

In our example, we are feeding the machine images of emojis via the inbuilt camera. The more varied the data we provide, the more likely the AI is to classify the input correctly as the appropriate emotion. A machine learning system usually reports a confidence value; in this case it is shown as a percentage and as a coloured bar that is fully or partially filled. The confidence value indicates how sure the AI is of its classification.
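As a rough illustration of where a confidence percentage can come from, the sketch below turns one raw score per class into percentages that add up to 100 and draws a text version of the coloured bar. The scores are made up, and the softmax formula is a common choice assumed here for illustration; this lesson does not state how Teachable Machine calculates its percentages. The names `confidences` and `bar` are hypothetical.

```python
import math

def confidences(scores):
    """Turn raw scores into percentages that add up to 100 (softmax)."""
    exps = {label: math.exp(score) for label, score in scores.items()}
    total = sum(exps.values())
    return {label: 100 * value / total for label, value in exps.items()}

def bar(percent, width=20):
    """Draw a text bar: '#' for the filled part, '.' for the empty part."""
    filled = round(percent / 100 * width)
    return "#" * filled + "." * (width - filled)

# Made-up raw scores for one webcam frame; a higher score means a better match.
scores = {"Background": 0.5, "Happy": 4.0, "Sad": 1.0}

for label, percent in confidences(scores).items():
    print(f"{label:<10} {bar(percent)} {percent:3.0f}%")
```

A nearly full bar for one class and nearly empty bars for the others corresponds to the "very sure" case students see on screen; several partly filled bars correspond to "not quite sure".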

This lesson focuses on the concept of classification. Classification is a learning technique used to group data based on attributes or features.
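To make "grouping data based on features" concrete, here is a minimal sketch that classifies a face using the example feature table from the learning hook. It is a hand-written rule, not machine learning: the function name `guess_emotion` and the counting rule are assumptions chosen for illustration.

```python
# Features for each emotion, copied from the example table in the learning hook.
EMOTION_FEATURES = {
    "Happy": {"smiling", "raised eyebrows", "eyes wide open",
              "puffy cheeks", "teeth showing", "lips facing up"},
    "Sad": {"eyebrows pinched", "eyes partly open", "lips facing down"},
    "Angry": {"eyebrows scrunched up", "nose pinched", "teeth clenched"},
}

def guess_emotion(observed):
    """Pick the emotion that shares the most features with the observed face."""
    matches = {emotion: len(features & observed)
               for emotion, features in EMOTION_FEATURES.items()}
    best = max(matches, key=matches.get)
    # If nothing matches at all, admit that we cannot tell.
    return best if matches[best] > 0 else "Not sure"

print(guess_emotion({"lips facing down", "eyes partly open"}))  # prints "Sad"
```

The difference from the Teachable Machine activity is worth discussing with older students: here a person wrote the list of features, whereas a machine learning model works out its own patterns from the example images it is given.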